Introduction

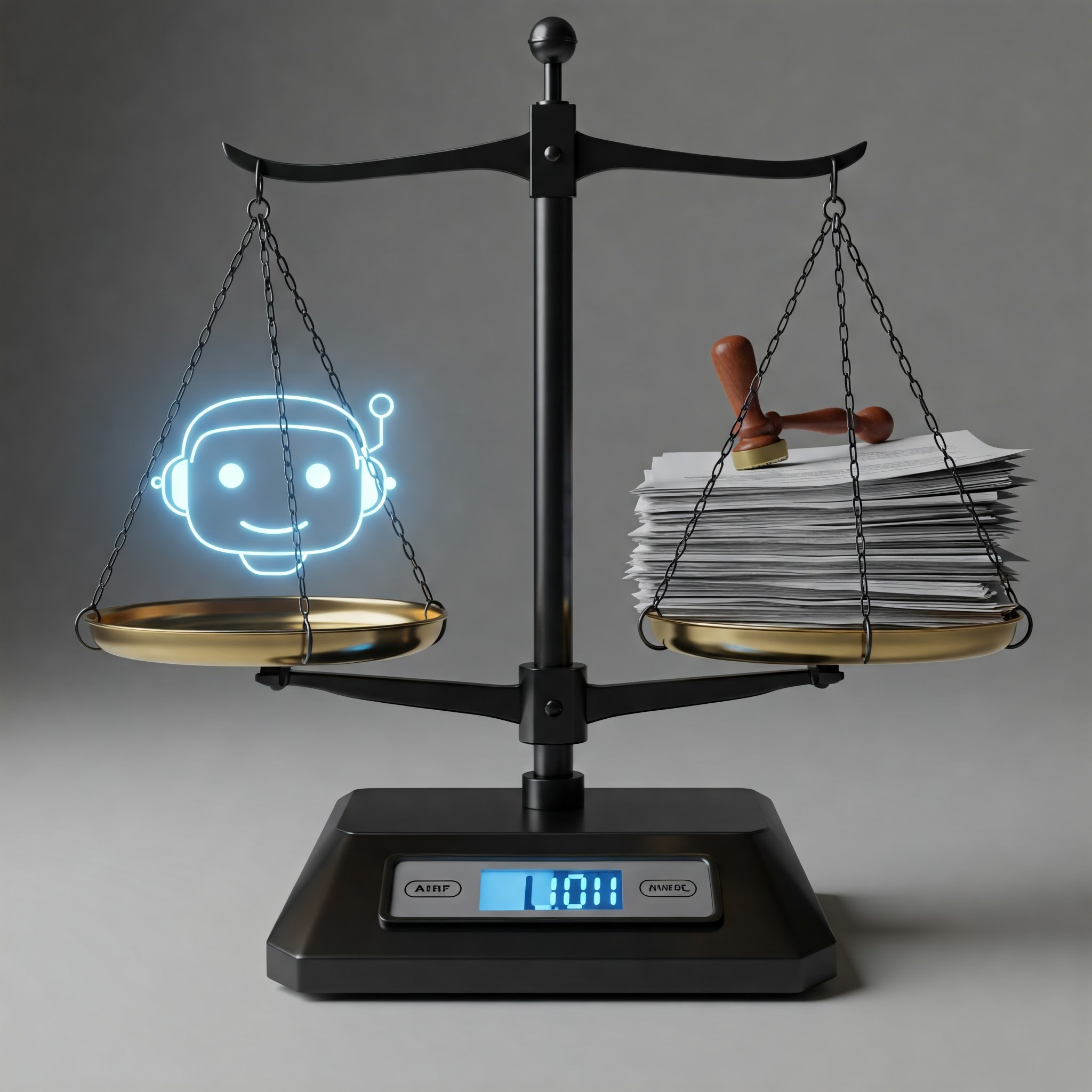

AI chatbots have become a staple of digital interaction, helping users with everything from customer service to content creation. However, as their popularity grows, so does legal scrutiny. Companies deploying AI chatbots are now encountering complex legal challenges ranging from data privacy and intellectual property rights to misinformation and defamation.

The Rise of Chatbots and Legal Grey Areas

Chatbots and the models behind them, such as ChatGPT, Google Bard (since rebranded as Gemini), and Meta’s LLaMA, are being integrated into applications at an unprecedented pace. Yet legal frameworks have not fully caught up with the technology. These AI tools operate across jurisdictions and are trained on vast datasets that may include copyrighted or sensitive content. As a result, it is often unclear who bears liability when something goes wrong.

For instance, if a chatbot generates false information that damages someone’s reputation, who is responsible? Is it the developer, the company using the bot, or the user who prompted the response?

Privacy Concerns and Data Usage

One of the most significant legal concerns surrounding AI chatbots is data privacy. Chatbots often process personal information, either intentionally or unintentionally. When users share details in a chat, that data could be stored, analyzed, or even leaked if proper security measures are not in place.

Regulators around the world are demanding stricter controls through laws such as the European Union’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA). Companies must now be transparent about how chatbot data is collected, stored, and used, or risk heavy penalties.

Intellectual Property and Copyright Issues

Another legal minefield is intellectual property. The models behind AI chatbots are trained on massive datasets, much of which is scraped from the internet. These datasets can include copyrighted articles, artworks, and even proprietary code. When a chatbot produces output that closely resembles copyrighted material, it can spark legal disputes.

Artists, writers, and software developers have started pushing back, arguing that AI models infringe on their intellectual property rights. Courts are still in the early stages of handling such cases, but the outcomes will set important precedents.

Defamation and Misinformation

AI chatbots can also unintentionally spread false or harmful information. For example, a chatbot could generate a statement implying criminal activity by a public figure based on inaccurate data. If this content goes public, it may lead to defamation lawsuits.

Misinformation generated by AI also raises ethical questions. When bots are used to generate news articles or educational content, even a slight inaccuracy can have serious consequences. Policymakers are now debating how to regulate AI-generated content without stifling innovation.

Jurisdictional Challenges

Since chatbots are accessible across borders, jurisdictional issues add another layer of complexity. Different countries have different laws around data protection, speech, and liability. A chatbot that is lawful in one country may be illegal in another, which complicates compliance for global tech companies.

Steps Toward Regulation and Compliance

Some solutions are beginning to emerge:

- Transparency Reports: Tech firms are being encouraged or required to publish details of how their chatbots operate, including their training data sources and moderation policies.

- Human Oversight: Ensuring that humans review critical outputs can reduce the risk of legal violations.

- Opt-In Data Collection: Clearly informing users about data use and giving them control can align with global privacy standards.
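To make the last two measures more concrete, here is a minimal Python sketch of how opt-in storage and a human-review flag might look in practice. Everything in it is an illustrative assumption rather than a standard or a legal requirement: the `ChatSession` class, the `record_message` and `needs_human_review` functions, and the `SENSITIVE_TERMS` keyword list are all hypothetical names, and a real deployment would need a far more careful review policy than keyword matching.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ChatSession:
    """Hypothetical per-user session; all names here are illustrative."""
    user_id: str
    consented_to_storage: bool = False  # opt-in: nothing is stored by default
    stored_messages: List[str] = field(default_factory=list)


# Hypothetical keyword list, used only to illustrate the idea of a review gate.
SENSITIVE_TERMS = ("arrested", "convicted", "fraud", "diagnosis")


def record_message(session: ChatSession, message: str) -> bool:
    """Store the message only if the user has opted in. Returns True if stored."""
    if not session.consented_to_storage:
        return False
    session.stored_messages.append(message)
    return True


def needs_human_review(reply: str) -> bool:
    """Flag replies that mention sensitive terms so a person can check them first."""
    lowered = reply.lower()
    return any(term in lowered for term in SENSITIVE_TERMS)
```

In this sketch, a session created without explicit consent stores nothing, and any reply that trips the keyword check would be routed to a person before it is shown or published. The key design choice is that both safeguards default to the cautious option.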

Conclusion

As AI chatbots become more advanced and integrated into everyday platforms, the legal landscape will continue to evolve. Companies must proactively address privacy, copyright, and misinformation concerns or risk facing costly lawsuits and reputational damage. By fostering transparency, enhancing data protection, and respecting intellectual property, organizations can responsibly navigate the growing legal challenges of AI chatbot deployment.